My website is not being indexed! Is it because the internet is full?

Latest status update (2025-09-13): Linux voor een beginner is still NOT indexed

A crazy thought: the internet might be full!

This story begins when my small new blog turned out to be poorly indexed by both Google and Bing. In February 2025 I registered a new domain, vooreenbeginner.nl, which is Dutch for “for a beginner”. The idea was to create micro-websites under this domain, such as Linux voor een beginner, focused on just Linux and open source.

Since I maintain several other websites, I performed the usual steps, like creating a sitemap and validating the website with Google Search Console and Bing Webmaster Tools. After validation, the next step was submitting the sitemap, so both search engines could start crawling the website. It did not take long before both did some crawling and pages showed up in the index. So all is good, right? Well, not really.
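Static site generators such as Hugo produce sitemap.xml out of the box, so there is normally no need to write one by hand. To show what such a file contains, here is a minimal Python sketch that builds a sitemap in the sitemaps.org format from a list of pages. The host linux.vooreenbeginner.nl is assumed from the site name, and the article path and dates are hypothetical placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Return a sitemap document as a string for a list of (url, lastmod) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # absolute URL of the page
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2025-09-13
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical pages: the article path and the dates are placeholders.
pages = [
    ("https://linux.vooreenbeginner.nl/", "2025-09-13"),
    ("https://linux.vooreenbeginner.nl/example-article/", "2025-09-01"),
]
print(build_sitemap(pages))
```

The URL of the resulting file is what gets submitted in Google Search Console and Bing Webmaster Tools.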

Pages dropping out of the index

After a few months, in April and May, the number of indexed pages started to drop. Strange, but I initially thought it might be related to the domain being new. Maybe Google gave me some extra chance to shine during the first months and then became a little more picky. In the meantime, I continued creating new pages and ignored the issue for a while.

While monitoring the situation now and then, I saw more and more pages drop out of the Google index. Bing was not much better, but since in my experience Bing usually indexes fewer pages than Google, I didn’t think much of it. I started to worry when my website was completely gone from the index.

Initial thoughts on the indexing problem

My first thought was that some change had negatively affected the index state of all pages. Since it applied to the full website, including the homepage, the cause had to be in the template. Then again, I would not expect that, as the same template is used on some of my other websites. But I did make some changes to improve readability and the top menu. Did I break something?

Some of my websites are created using the amazing Hugo. Once upon a time I started with a bare-bones template, but I customized it so much that hardly anything of the original is left. Since the template powers my other blog Linux Audit, I knew it worked: it results in clean HTML code and, most importantly, in a website that is light and easy to navigate. So in this area I could not find anything suspicious that would result in delisting from the search engine indexes.

The webmaster tools from Google and Bing provide good overall indicators when it comes to indexing. They cover issues like slow pages, a ‘noindex’ directive, or server errors. I went through both, but did not see anything that would directly result in a de-indexed website. Time to consult others to see if they had a clue or a hint. I shared some ideas with Jos Klever (thanks Jos!) and tried some of the suggestions provided by the great people responding to my Mastodon thread.
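An accidental ‘noindex’ can also be ruled out by hand by scanning the rendered HTML and the response headers of a page. The following is a minimal sketch using only Python’s standard library. It assumes the page and its headers were already fetched, looks for a robots-related meta tag (robots, googlebot or bingbot), and checks an X-Robots-Tag header for noindex or none. The header lookup in this simple version is case-sensitive.

```python
import re
from html.parser import HTMLParser

ROBOTS_META_NAMES = ("robots", "googlebot", "bingbot")
BLOCKING = {"noindex", "none"}  # "none" is shorthand for "noindex, nofollow"

class RobotsMetaParser(HTMLParser):
    """Collects the directives of robots-related <meta> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ROBOTS_META_NAMES:
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())

def has_noindex(html, headers=None):
    """Return True if the page HTML or its X-Robots-Tag header blocks indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = (headers or {}).get("X-Robots-Tag", "").lower()
    # A header may look like "noindex" or "googlebot: noindex, nofollow".
    header_tokens = set(re.split(r"[,:\s]+", header))
    return bool((parser.directives | header_tokens) & BLOCKING)

print(has_noindex('<meta name="robots" content="noindex, follow">'))  # True
```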

Making small changes

Along the way I made a bunch of small changes to the templates and fixed some issues, like the top navigation menu not being fully mobile-friendly. I know search engines like websites to be mobile-friendly, but at the same time they didn’t complain about it. I also cleaned up the meta properties in the head section. I was pretty sure all these little things would not suddenly resolve the problem, especially considering that the average website is full of minor and major defects.

I started to document the changes. This way, if my website got indexed again, I would at least have an idea which change made the difference. But weeks passed, and nothing happened.

So if you are wondering about these changes, they include things like adding a favicon, updating the footer, and adding a missing alt text to a generic icon. Small things, nothing that really impacts the website or its usage.

The first hint? Using IndexNow

On another blog I implemented IndexNow. It’s a great way to inform search engines like Bing that some of the content was updated. As I did make small changes to the website, I thought it could be beneficial to let the search engines know. It allows them to reassess a page right away and decide whether it is now worthy of (re)entering their index. The nice thing about IndexNow is that you only have to notify a single endpoint; the participating search engines share the update with each other. Looking at the log files you can see how it works. Just minutes after submitting an update, several search engines, like Bing, Yandex, and Seznam, visit the updated page.
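The IndexNow protocol itself is small: a JSON POST to a shared endpoint containing the host, a key, and a list of URLs. The same key is also published as a text file on the host, so the search engines can verify ownership. Below is a minimal Python sketch that only builds such a request with the standard library. The key value is a hypothetical placeholder, the endpoint is the generic api.indexnow.org one, and the actual submission is left commented out.

```python
import json
import urllib.request

# Shared IndexNow endpoint; participating search engines exchange submissions.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"

def build_indexnow_request(host, key, urls, key_location=None):
    """Build (without sending) an IndexNow POST request for a batch of URLs."""
    payload = {"host": host, "key": key, "urlList": list(urls)}
    if key_location:
        # Only needed when the key file is not at https://<host>/<key>.txt
        payload["keyLocation"] = key_location
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )

req = build_indexnow_request(
    host="linux.vooreenbeginner.nl",
    key="0123456789abcdef",  # hypothetical key, also served as /0123456789abcdef.txt
    urls=["https://linux.vooreenbeginner.nl/"],
)
# To actually submit: urllib.request.urlopen(req)
# A 200 or 202 status means the submission was accepted.
```

Batching all changed URLs into one `urlList` keeps the number of requests low after a round of small template changes.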

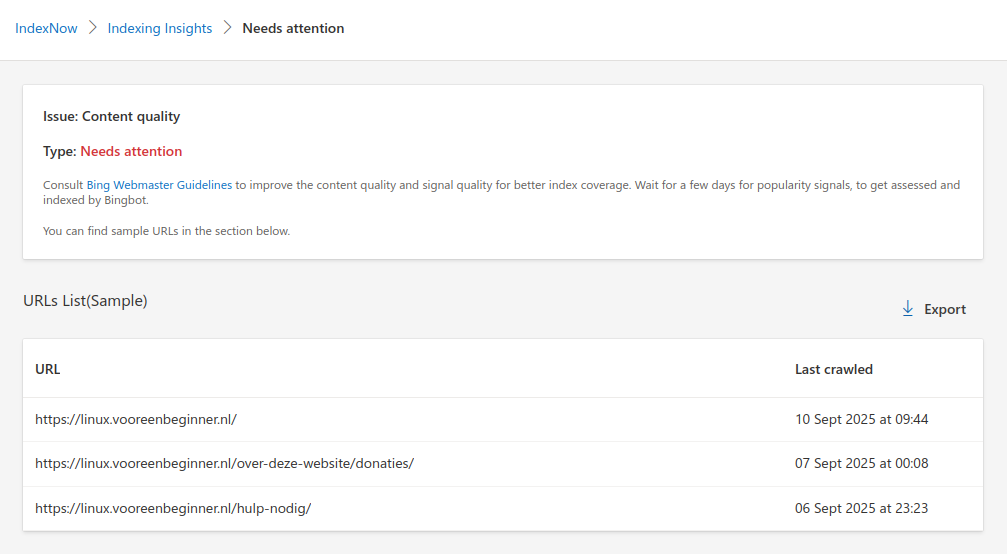

By implementing IndexNow I got an interesting hint: “Issue: Content Quality”. So is it a content issue after all?

Quality issue on the homepage?

Researching the content quality issue

My blog posts are original work and no AI is involved. So the chance that the content is too generic or suspiciously similar to other websites is small. But obviously that doesn’t mean my articles are automatically good. For that, a little more is needed, such as a focus on readability. When I write articles, I aim for simple words and short sentences. Paragraphs shouldn’t be too long either.
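Readability targets like these are easy to check mechanically. Here is a rough Python sketch, assuming plain text with blank lines between paragraphs and a naive sentence split on ., ! and ?. The limits of 20 words per sentence and 120 words per paragraph are illustrative assumptions, not thresholds that search engines are known to use.

```python
import re

def readability_report(text, max_sentence_words=20, max_paragraph_words=120):
    """Flag long sentences and long paragraphs in plain text.

    The default thresholds are illustrative assumptions, not an official standard.
    """
    report = {"long_sentences": [], "long_paragraphs": []}
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    for index, paragraph in enumerate(paragraphs):
        if len(paragraph.split()) > max_paragraph_words:
            report["long_paragraphs"].append(index)  # zero-based paragraph index
        # Naive sentence split: ., ! or ? followed by whitespace.
        for sentence in re.split(r"(?<=[.!?])\s+", paragraph):
            if len(sentence.split()) > max_sentence_words:
                report["long_sentences"].append(sentence)
    return report

print(readability_report("Linux is an operating system. It is free."))
```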

So what could possibly be wrong with my articles? Also, does the full website really have a content quality issue? That doesn’t make sense. Yet IndexNow shows that the homepage is affected as well. That’s strange, as it explains what the website is about and who the author is. After all, Google promotes E-A-T in its Google Search Quality Evaluator Guidelines. In case you are wondering, E-A-T is short for Expertise, Authoritativeness, and Trustworthiness (since late 2022 extended to E-E-A-T, adding Experience). So including some background information about the author should actually help in this area.

Even after reading the Bing Webmaster Guidelines several times, I could not see what might cause the homepage and other pages to be flagged. After all, I have a sitemap, robots.txt, and IndexNow in place, and I use normal links. The HTML is properly structured and checked with the W3C validator. The server is quick and the log files are clean, showing (almost) no errors.
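Whether robots.txt accidentally blocks regular pages can be verified offline with Python’s built-in `urllib.robotparser`. In this sketch the robots.txt content, including the `/drafts/` rule and the sitemap URL, is a hypothetical example; for a real check, paste in the live file. The function reports every crawler and URL combination that would be blocked.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; replace it with the live file of the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/

Sitemap: https://linux.vooreenbeginner.nl/sitemap.xml
"""

def blocked_urls(robots_txt, urls, agents=("Googlebot", "Bingbot")):
    """Return (agent, url) pairs that the given robots.txt would block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [(agent, url) for agent in agents for url in urls
            if not parser.can_fetch(agent, url)]

pages = [
    "https://linux.vooreenbeginner.nl/",
    "https://linux.vooreenbeginner.nl/drafts/test/",  # hypothetical blocked path
]
print(blocked_urls(ROBOTS_TXT, pages))
```

An empty list for the regular pages means robots.txt is not the reason they drop out of the index.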

Going for an extensive audit

So with no actionable hints from Bing’s webmaster guidelines, I tried some SEO tools that can perform an audit. Someone suggested using a free Ahrefs account, so I did. On top of that, I used a few others. Besides the usual “title too long” or “title too short”, there were hardly any big action items. They were all in line with the smaller changes I had already applied.

The good thing about using different external tools is that you can be sure you didn’t make an obvious mistake and simply overlook it. After all, when you look at the same code over and over, you may miss the obvious. So although I improved the website a bit further, I can’t say that I found anything that made me think I had solved this puzzle.

Some thoughts

As you can probably imagine by now, I had this indexing issue on my mind. So let me share some random thoughts about the cause.

Generating too much new content?

When I started the blog, I tried to write a small article every day. Especially since the blog is an introduction to Linux, the articles were fairly easy to create. I just tried to write down how I would explain things to a new user. Did I create too much content in a short amount of time?

Too much optimization?

Maybe my website is too optimized and gives the search engines bad vibes? Hugo websites (with the right template) are insanely small and fast. Did I over-optimize it? Probably not, otherwise my other websites would have had the same issue.

Website not mature?

Maybe because it’s a new domain, the search engines don’t trust it (yet)? Not much that I can do, except promoting the initiative.

What about using a subdomain?

Google doesn’t mind the use of subdomains. Strictly speaking, my website is not on a subdomain; it’s just a host named ‘linux’ within the domain. But I had no homepage on the domain (vooreenbeginner.nl) itself. Maybe that is unexpected? So I created a simple page and put it up. To my surprise, that one was actually indexed!

Maybe I promoted the website incorrectly?

I’m no longer active on Twitter/X and definitely not on Facebook, so I revealed my project on Mastodon. The good thing about this platform is its nice bunch of people. They appreciated the effort and shared it with their followers. Because Mastodon is decentralized, you suddenly get a spike of incoming links, as they come from multiple servers. The downside is that search engines may see this as many duplicate messages linking to my website. Is this considered spammy? Not much that I can do about that, obviously. It’s simply the nature of decentralized social media.

Missing incoming links?

Most search engines love it when links point to your website or blog. It gives them an idea of attribution and possibly a hint that there is good-quality content to look for. Getting incoming links is hard, though. It takes time and it takes some promotion. On my Linux initiative I do ask people to link to my website if they like it. It’s a great way to donate to the initiative and promote it at the same time.

I’m wondering whether more incoming links would resolve the indexing issue. Maybe you just need enough incoming links to get recognized? That would be great. Getting indexed would be great too, so people can discover the blog in the first place and link to it *wink*.

Checklist for indexing issues

To combat the indexing issue, I read multiple websites. Many offer generic solutions like “just wait” or “write more content”. So the checklist below is my attempt to help anyone with indexing issues.

- Content quality
  - Content provides an insight or solution to a problem
  - No filler text that does not add any value
  - Grammar checked
- Readability
  - Paragraphs not too long
  - Number of words per line
- Indexing
  - Sitemap file correctness
  - Sitemap file updated on a regular basis
  - IndexNow implemented
- Links
  - Is the website getting incoming links?
  - Do the outgoing links point to healthy websites?
  - Internal links to create an interconnected web of pages
  - Are incoming links coming from bad hosts?
- HTML quality
  - Test with W3C validator
  - Meta tags defined
  - Semantic HTML used?
    - body
    - header
    - footer
  - Schema.org markup
  - Text surrounded with `<article>` tag
- Robots.txt
  - Is it present?
  - Validated that regular pages are not being blocked?
  - No search engines blocked?
  - Is simplification possible?
- Authoritativeness
  - Is the content written by a person with the right expertise?
  - Is the author mentioned on the page?
  - Is there an about page?
- Crawling
  - Can the search engines reach your server each time?
  - Is the performance good?
  - Does Google report any DNS issues?
- Log files
  - Are there many errors in the log file?
  - Do the search engines show up in the log file?
  - Are robots.txt and the sitemap downloaded?
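The log file questions in this checklist can be answered with a small script. This Python sketch assumes the “combined” log format commonly used by Apache and nginx and a sitemap at /sitemap.xml (Hugo’s default location). It recognizes crawlers purely by user-agent substring; since user agents are easily spoofed, a serious check would also verify the crawler IP addresses. The sample lines are made up and use documentation IP ranges.

```python
import re
from collections import Counter

# Combined log format:
# ip ident user [time] "METHOD path PROTOCOL" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
CRAWLERS = ("Googlebot", "bingbot", "YandexBot", "SeznamBot")
WATCHED_PATHS = ("/robots.txt", "/sitemap.xml")  # assumed locations

def crawler_summary(lines):
    """Count crawler hits, error responses, and robots.txt/sitemap fetches."""
    hits, errors, fetched = Counter(), Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in combined format
        status = int(match["status"])
        if status >= 400:
            errors[status] += 1
        agent = match["agent"].lower()
        for bot in CRAWLERS:
            if bot.lower() in agent:
                hits[bot] += 1
                if match["path"] in WATCHED_PATHS:
                    fetched[(bot, match["path"])] += 1
    return hits, errors, fetched

# Made-up sample lines for illustration only.
SAMPLE = [
    '192.0.2.1 - - [13/Sep/2025:10:00:00 +0000] "GET /robots.txt HTTP/1.1" 200 120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [13/Sep/2025:10:05:00 +0000] "GET /sitemap.xml HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.9 - - [13/Sep/2025:10:06:00 +0000] "GET /missing/ HTTP/1.1" 404 512 "-" "curl/8.5.0"',
]
hits, errors, fetched = crawler_summary(SAMPLE)
print(hits, errors, fetched, sep="\n")
```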

Anything else that should be part of this checklist?

What if: there is NOT a problem with my website

Is it possible that the problem is not within my website, but in how the web works nowadays? I’m starting to believe that we are ruining our precious internet with AI slop. Open source developers like Daniel Stenberg from the curl project took an active stance against crappy AI-generated issue reports. I see in my log files that the crawlers from AI companies happily traverse my website and its content. Maybe they are overwhelming Google and Microsoft as well?

Normally, when you create a new website, it takes a while to set things up and especially to create the content for it. With a little bit of automation you could now register a domain, set up its configuration, and completely fill it with auto-generated AI garbage.

For many years people rigged the SEO game, trying to compete for the first spot. Google took measures, including penalties for domains that showed signs of unethical behavior. At the same time, Google’s results did not improve in quality. I liked it in the early days, when I could always find an answer to my technical problems or that specific product I needed. But that time has been gone for some years now. The first page is filled with advertisements, but surprisingly the second and following pages are not much better. Is it possible that Google is overwhelmed?

Is the internet full?

I’m starting to believe that the internet is full. Well, maybe I should say the web. There are so many domains, websites, and so much content, that companies including Google can no longer properly keep track of it. Now with AI they face an even bigger challenge to sort through all the auto-generated crap. That leaves them less time to spend on smaller niche blogs like mine, especially when they are written in a silly non-English language!

So we might have reached a point where it is no longer possible to get a clear picture of what the internet, or the web, is. A tipping point where new websites will find it really difficult to get ranked.

What do you think?

SEO challenge: find the cause

Are you up for a challenge? Maybe I’m plain wrong or I simply screwed up. I encourage you to look at the website and its code. If you find the real cause and it resolves this indexing issue, you will get a prominent place in this article.